AI Safety Summit: Cracking the code of risk mitigation

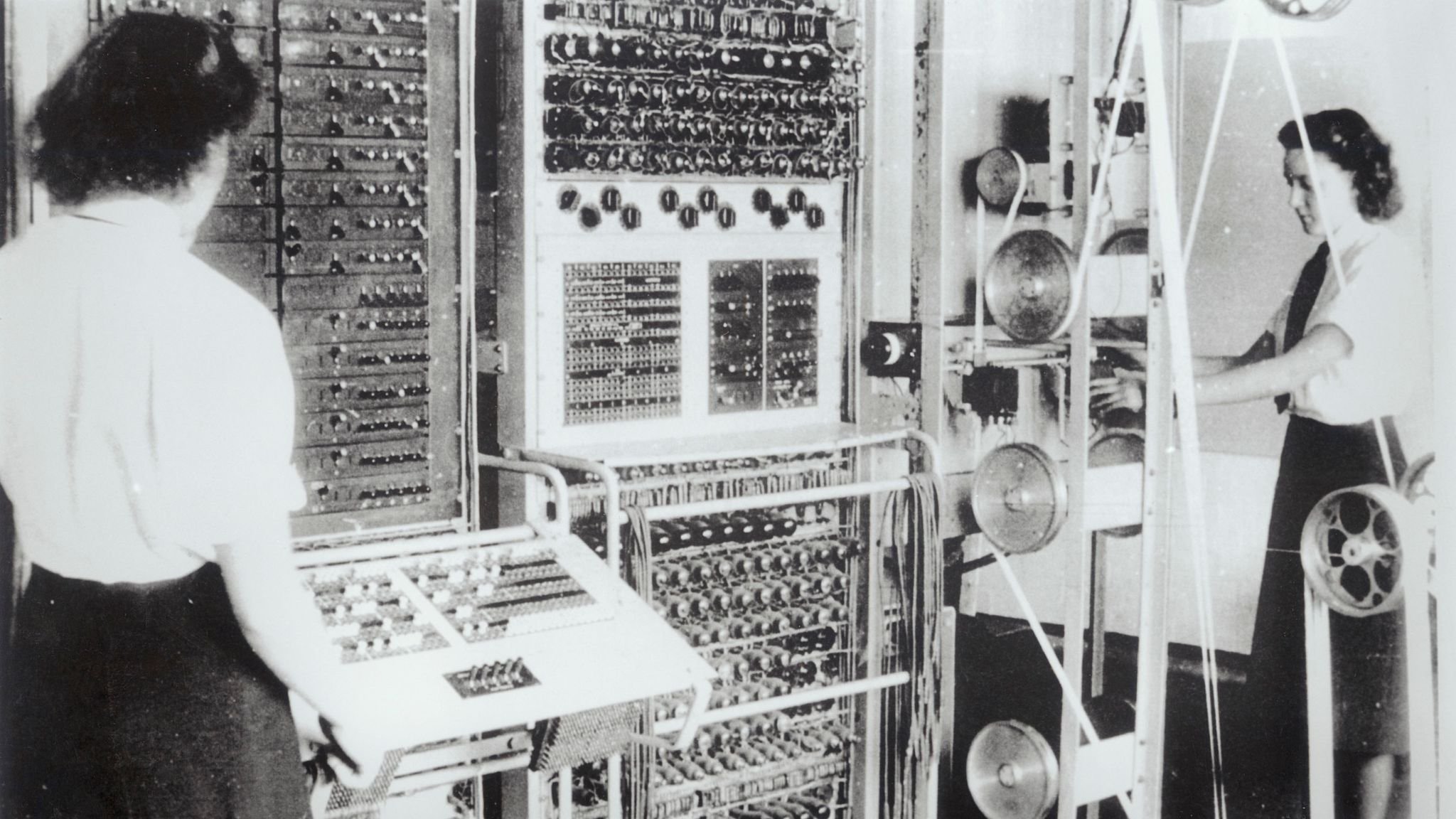

The legacy of Bletchley Park lives on.

This week, Tristan and Tasia unpack the highlights and key outcomes from the UK's recent AI Safety Summit, where global leaders, tech giants, and AI researchers convened to forge the Bletchley Declaration. We dive into the debate over open-source AI, the commitments from major tech companies to preemptively test AI products, and the challenges of aligning international AI regulations, particularly around frontier AI. Join us as we discuss whether these collaborative efforts to secure a safe future with AI will actually be effective, given competing geopolitical priorities and relentless technological advancement. Also: another Microsoft AI whoopsie-doopsie. 😬

LISTEN

FOLLOW

AI Named This Show on Facebook, Instagram, YouTube and X (Twitter)

AI Named This Show podcast on Acast, Amazon Music, Apple Podcasts, Google Podcasts, iHeart or Spotify

FOLLOW-UP

AI NEWS

AI SAFETY SUMMIT

World leaders are gathering at the U.K.’s AI Summit. Doom is on the agenda.

The Bletchley Declaration by Countries Attending the AI Safety Summit, 1-2 November 2023

Analysis: AI summit a start but global agreement a distant hope

Why China’s Involvement in the U.K. AI Safety Summit Was So Significant

Bletchley Park: Five facts about the UK's AI Safety Summit venue

AI SAFETY PRIMERS